Modeling co-tenant risk for cloud services

This talk covers building models of co-tenant risk for cloud services. It was presented at the Melbourne ISC² chapter meeting in July 2021 (2021-07).

DES Cipher visualisations

DES

- 64-bit block, 56-bit key
- The key is nominally 64 bits; 8 of those bits are used for parity, not keying
- Five modes of operation:
  - ECB - Electronic Code Book
  - CBC - Cipher Block Chaining
  - CFB - Cipher Feedback
  - OFB - Output Feedback
  - CTR - Counter
- 64 bits of plaintext generate 64 bits of ciphertext

Diagrams:

- DES - Electronic Code Book Mode
- DES - CBC - Cipher Block Chaining Mode
- DES - CFB - Cipher Feedback Mode
- DES - OFB - Output Feedback Mode
- DES - CTR - Counter Mode
- 3DES (DES-EEE3)

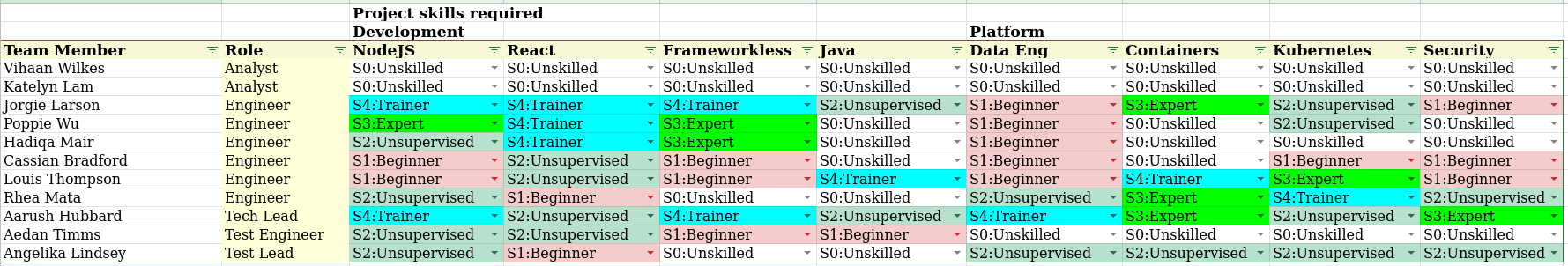

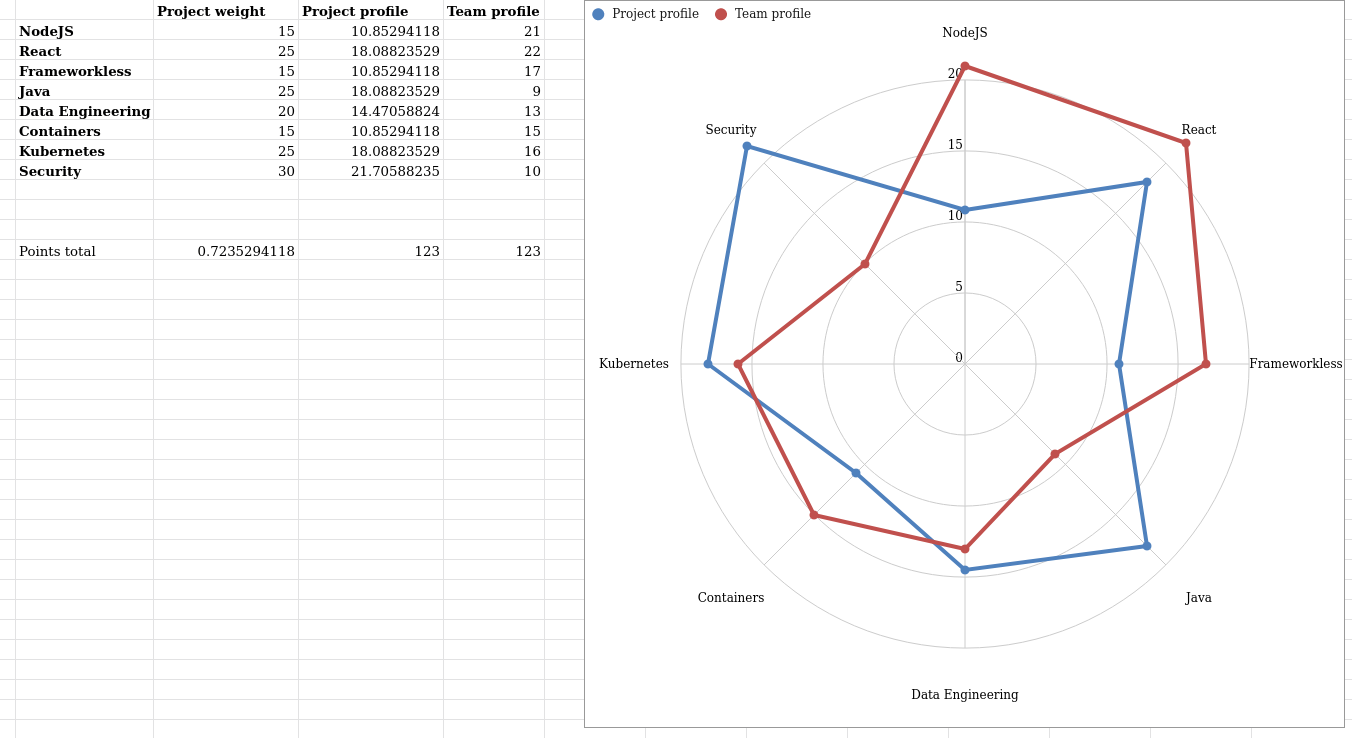

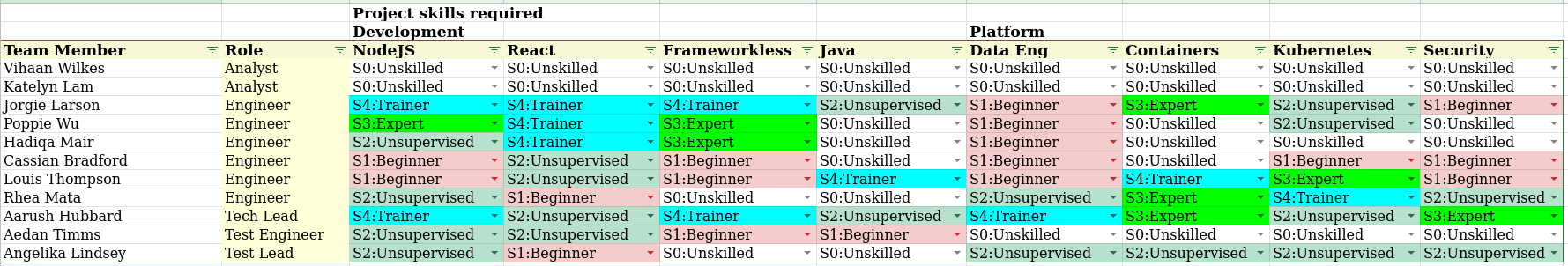

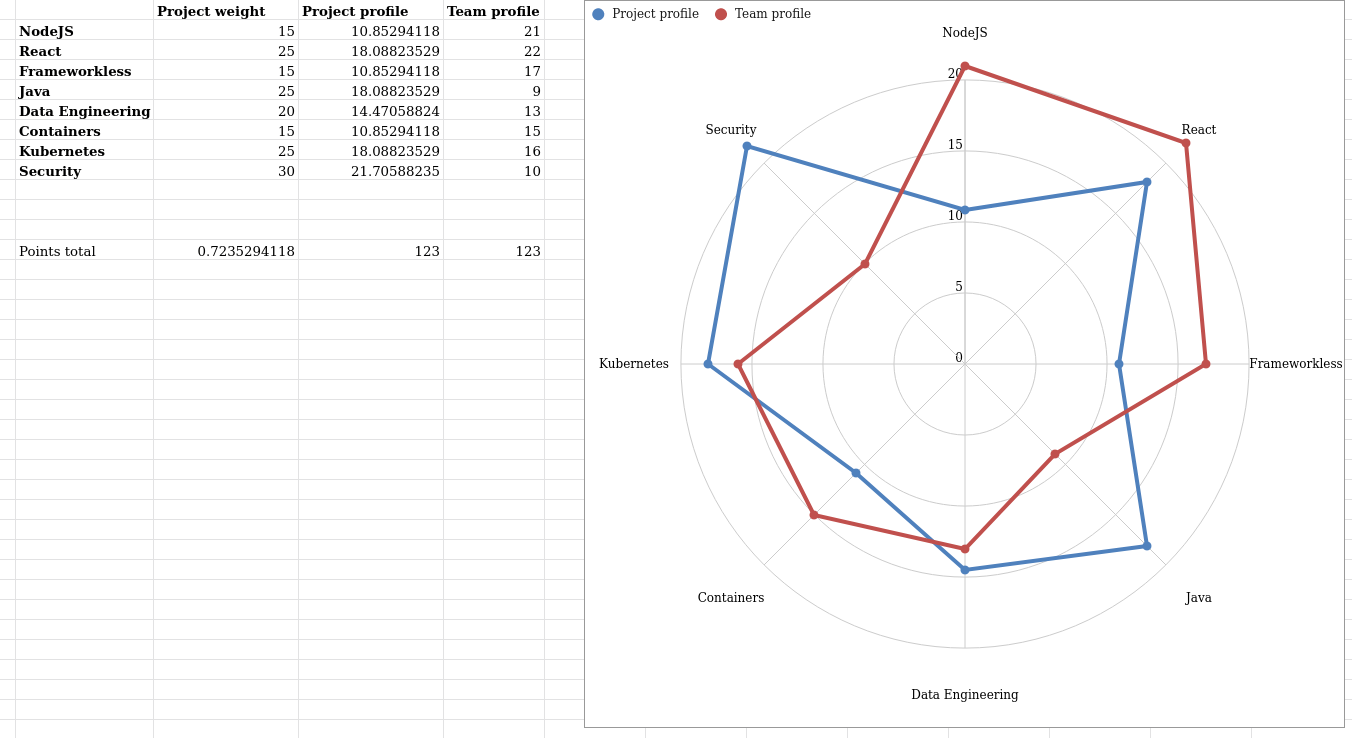
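The Python sketch below makes the block chaining in ECB, CBC and CTR concrete over 64-bit blocks. It does **not** implement DES. `toy_encrypt`/`toy_decrypt` are a hypothetical, insecure stand-in (XOR with the key, then a 13-bit rotation), chosen only because they are invertible on 64-bit integers. The key, IV and nonce values are arbitrary, and CFB and OFB are omitted.

```python
MASK64 = (1 << 64) - 1

def toy_encrypt(block: int, key: int) -> int:
    """Hypothetical stand-in for DES: invertible on 64-bit ints, NOT secure."""
    x = (block ^ key) & MASK64
    return ((x << 13) | (x >> 51)) & MASK64  # rotate left by 13 bits

def toy_decrypt(block: int, key: int) -> int:
    x = ((block >> 13) | (block << 51)) & MASK64  # rotate right by 13 bits
    return x ^ key

def ecb_encrypt(blocks, key):
    # ECB: every block is encrypted independently with the same key.
    return [toy_encrypt(b, key) for b in blocks]

def cbc_encrypt(blocks, key, iv):
    # CBC: XOR each plaintext block with the previous ciphertext block (IV first).
    out, prev = [], iv
    for b in blocks:
        prev = toy_encrypt(b ^ prev, key)
        out.append(prev)
    return out

def cbc_decrypt(blocks, key, iv):
    out, prev = [], iv
    for c in blocks:
        out.append(toy_decrypt(c, key) ^ prev)
        prev = c
    return out

def ctr_crypt(blocks, key, nonce):
    # CTR: keystream block i is E(nonce + i); the same call encrypts and decrypts.
    return [b ^ toy_encrypt((nonce + i) & MASK64, key) for i, b in enumerate(blocks)]

KEY, IV, NONCE = 0x133457799BBCDFF1, 0xAAAAAAAAAAAAAAAA, 0x1000
plain = [0x0123456789ABCDEF, 0x0123456789ABCDEF]  # two identical blocks

ecb = ecb_encrypt(plain, KEY)
cbc = cbc_encrypt(plain, KEY, IV)
print(ecb[0] == ecb[1])                     # True
print(cbc[0] == cbc[1])                     # False
print(cbc_decrypt(cbc, KEY, IV) == plain)   # True
print(ctr_crypt(ctr_crypt(plain, KEY, NONCE), KEY, NONCE) == plain)  # True
```

Under ECB, the two identical plaintext blocks produce identical ciphertext blocks, which is the pattern leakage that ECB diagrams usually illustrate. CBC hides this by mixing in the previous ciphertext block.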

Skill tree and matrix example

Goals

- Understand how well the team composition matches project requirements

- Identify gaps and risks

- Create individual learning paths for team members

Step 1 - Create a Skill Tree

Step 2 - Fill in Skill Matrix

Step 3 - Report against project profile

Note: this provides relative focus information, not absolute capacity data.
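Step 3 can be sketched in Python under some assumptions of our own. The skill matrix is a dict of person → skill → level, and the project profile assigns a weight to each skill. Both are normalised to shares, so the comparison is relative rather than a measure of capacity. The function names, the level scale and the example data are all hypothetical.

```python
from collections import defaultdict
from typing import Dict

Matrix = Dict[str, Dict[str, float]]  # person -> skill -> level (hypothetical scale)

def team_focus(matrix: Matrix) -> Dict[str, float]:
    """Share of the team's total skill points held in each skill (sums to 1)."""
    totals: Dict[str, float] = defaultdict(float)
    for person_skills in matrix.values():
        for skill, level in person_skills.items():
            totals[skill] += level
    grand = sum(totals.values()) or 1.0
    return {skill: pts / grand for skill, pts in totals.items()}

def normalise(profile: Dict[str, float]) -> Dict[str, float]:
    """Turn project skill weights into shares that sum to 1."""
    total = sum(profile.values()) or 1.0
    return {skill: w / total for skill, w in profile.items()}

def gap_report(matrix: Matrix, project_profile: Dict[str, float]) -> Dict[str, float]:
    """Positive value: the project needs relatively more focus on a skill than the team has."""
    team = team_focus(matrix)
    need = normalise(project_profile)
    skills = sorted(set(team) | set(need))
    return {s: round(need.get(s, 0.0) - team.get(s, 0.0), 3) for s in skills}

matrix = {"alice": {"python": 3, "networking": 1}, "bob": {"python": 2, "security": 2}}
profile = {"python": 1, "security": 2, "networking": 1}
print(gap_report(matrix, profile))
# {'networking': 0.125, 'python': -0.375, 'security': 0.25}
```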

Example

Download example spreadsheet,

Names and data generated randomly.

Intel/AMD virtualization isolation and containment

Notes

This is the second part of a series. Read Part 1 - Process Isolation and Containment.

Unless mentioned otherwise, I will be referring to Intel and Linux architecture.

Virtual hardware

The key capability that enables cloud computing is the ability to separate computational activity from physical devices. This is generally referred to as virtualization.

The Popek and Goldberg virtualization requirements can be captured at a high level as follows:

- Virtualization constructs an isomorphism from guest to host by implementing functions V() and E().
- All guest state S is mapped onto host state S' through a function V(S).
- For every state change operation E(S) in the guest, there is a corresponding state change E'(S') in the host, such that V(E(S)) = E'(V(S)).

In our case, we want a host Intel x86 system (state S') to have the state S of a guest Intel x86 system mapped onto it, securely and efficiently.
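The following Python snippet is a toy illustration of the mapping condition. Guest state is a small dict of registers, and the hypothetical `V` places each register in a prefixed host-side slot. `commutes` then checks whether V(E(S)) equals E'(V(S)) for a given guest operation and host operation. Real hypervisors rely on hardware-assisted virtualization rather than anything like this. All names here are illustrative only.

```python
from typing import Callable, Dict

State = Dict[str, int]
Op = Callable[[State], State]
PREFIX = "vm0."  # hypothetical host-side namespace for this guest

def V(guest: State) -> State:
    """Map guest state S onto host state S' by giving each guest register a host slot."""
    return {PREFIX + reg: val for reg, val in guest.items()}

def E_inc(reg: str) -> Op:
    """A state change: increment one register with 64-bit wrap-around."""
    def op(s: State) -> State:
        t = dict(s)
        t[reg] = (t[reg] + 1) & 0xFFFFFFFFFFFFFFFF
        return t
    return op

def E_prime_inc(reg: str) -> Op:
    """Host-side counterpart E': the same increment, applied to the mapped slot."""
    return E_inc(PREFIX + reg)

def commutes(guest: State, e: Op, e_prime: Op) -> bool:
    """Check the mapping condition V(E(S)) == E'(V(S)) for one state and one operation."""
    return V(e(guest)) == e_prime(V(guest))

g = {"rax": 1, "rbx": 2}
print(commutes(g, E_inc("rax"), E_prime_inc("rax")))  # True
print(commutes(g, E_inc("rax"), E_prime_inc("rbx")))  # False: host updated the wrong slot
```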

Remote API checklist

An API should:

- Be aligned with the business vision and roadmap
- Adopt a client-first approach for developers (see Amundsen Maturity Model)
- Be easy to discover
- Be well documented
  - API documentation
  - Use of HTTP status codes
  - Schemas for payloads
  - Sample payloads
  - Capability documentation
  - Performance indications
  - Rate limits (soft, hard) and resets
  - Payload limits
  - Warn at sensitive endpoints and payloads (PII, PHI, PFI, etc.)
- Be easy to learn (UX, simplicity)
- Be easy to consume (simplicity)
- Provide end-user utilisation reporting
- Provide webhooks for custom actions
  - Actions for utilisation and billing limits
  - Actions for policy violations
- Scale to meet demand
- Allow per-customer setting of HTTP headers
  - Support X-HTTP-METHOD-Override for misconfigured proxies
  - CORS for web clients
  - Set caching headers

Usability

- Be versioned
- Be stateless
- Provide useful functionality per call to minimise round-trips (see Amundsen Maturity Model)
- Be consistent (naming conventions, parameters, errors)
- Provide clear error messages
- Support modification timestamps
- Support unique external identifiers
- Support pagination, with total set size
- Support filtering on multiple properties/types
- Support bi-directional sorting on multiple ordered properties
- Be secure
  - Use HTTPS
  - Use access tokens
  - Use whitelisting/blacklisting
  - Federate to external (customer selected) IDP (e.
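Three of the checklist items (filtering on multiple properties, bi-directional sorting on multiple properties, and pagination with total set size) can be sketched together on the server side. Below is a minimal in-memory Python sketch. It assumes a hypothetical `query` function and response shape (`total`, `page`, `page_size`, `items`) and is not tied to any particular framework.

```python
from typing import Any, Dict, List, Optional, Sequence, Tuple

Record = Dict[str, Any]

def query(records: List[Record],
          filters: Optional[Dict[str, Any]] = None,
          sort: Sequence[Tuple[str, bool]] = (),  # (property, descending?) pairs
          page: int = 1,
          page_size: int = 20) -> Dict[str, Any]:
    """Filter on several properties, sort on several keys, then return one page
    together with the total size of the filtered set."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be >= 1")
    filters = filters or {}
    rows = [r for r in records if all(r.get(k) == v for k, v in filters.items())]
    # Python's sort is stable (also with reverse=True), so sorting from the least to
    # the most significant key yields a multi-key sort with a direction per key.
    for key, descending in reversed(sort):
        rows.sort(key=lambda r: r[key], reverse=descending)
    start = (page - 1) * page_size
    return {"total": len(rows), "page": page, "page_size": page_size,
            "items": rows[start:start + page_size]}

people = [
    {"id": 1, "team": "a", "age": 30},
    {"id": 2, "team": "a", "age": 25},
    {"id": 3, "team": "b", "age": 30},
    {"id": 4, "team": "a", "age": 30},
]
result = query(people, filters={"team": "a"}, sort=[("age", True), ("id", False)], page_size=2)
print(result["total"], [r["id"] for r in result["items"]])  # 3 [1, 4]
```

Returning `total` alongside each page lets a client compute the page count without issuing a separate count request.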

Visibility maturity

Simple checklists for evaluating visibility maturity

Logs

Maturity is measured on a scale from 0 to 5. Each item below counts for 1 point towards the total score.

- Application emits logs at runtime with appropriate log levels (DEBUG, INFO, WARNING, ERROR)
- Application uses a centralised logging system
- Application uses structured logs
- Application logs contain externally defined and documented diagnostic codes
- Application logs contain correlation identifiers for matching with other visibility data (external logs, tracing)

Metrics

Maturity is measured on a scale from 0 to 5.
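The structured-log, diagnostic-code and correlation-identifier items can be illustrated with Python's standard `logging` and `json` modules. In this minimal sketch, a custom `Formatter` renders each record as one JSON line. The output field names, the `code`/`correlation_id` extras and the `APP-1042` code are hypothetical.

```python
import json
import logging
import uuid

class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object (one structured log line)."""
    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "ts": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Hypothetical extra fields supplied via `extra=`; missing ones become null.
            "code": getattr(record, "code", None),
            "correlation_id": getattr(record, "correlation_id", None),
        }
        return json.dumps(entry)

def make_logger(name: str = "app") -> logging.Logger:
    handler = logging.StreamHandler()  # writes to stderr
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger

log = make_logger()
cid = str(uuid.uuid4())  # would normally arrive with the incoming request
log.info("payment accepted", extra={"code": "APP-1042", "correlation_id": cid})
```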

Pipeline visualizations

Various diagrams

Note: these are not meant to represent best or even appropriate practices. Source is included for ease of reuse.

Failure rates in multi-step, manual sequences

Source data

Using the Human Error Rates from a previous post.

Example - github.com - Creating a pull request from a fork

Process

1 - List the steps

- Navigate to the original repository
- To the right of the Branch menu, click New Pull Request
- On the Compare page, click compare across forks
- Confirm base-fork and base-branch
- Use head fork to select the fork, and compare branch to select the branch
- Type a title and description for your pull request
- If you do not want to allow upstream edits, unselect Allow edits

2 - Classify the steps and add error rates

Steps Classification Error rate 1.
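If we assume the steps fail independently, the sequence as a whole succeeds only when every step succeeds. The chance of the sequence failing is therefore P(fail) = 1 - ∏(1 - pᵢ) over the per-step error rates pᵢ. Below is a small Python sketch of that calculation. The 1% per-step rate in the usage line is a placeholder, not a value from the error-rate table.

```python
from math import prod
from typing import Iterable

def sequence_failure_rate(error_rates: Iterable[float]) -> float:
    """Probability that at least one step fails, assuming steps fail independently."""
    rates = list(error_rates)
    if any(not 0.0 <= p <= 1.0 for p in rates):
        raise ValueError("each error rate must be a probability in [0, 1]")
    # The sequence succeeds only if every individual step succeeds.
    success = prod(1.0 - p for p in rates)
    return 1.0 - success

# Placeholder: seven steps, as in the pull-request example, at a hypothetical 1% each.
print(round(sequence_failure_rate([0.01] * 7), 3))  # 0.068
```

Even small per-step error rates compound quickly: seven steps at 1% each give roughly a 6.8% chance that the sequence goes wrong somewhere.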